How MongoDB Cut PR Size by ~50% and Improved PR Cycle Time with Optimal AI

MongoDB’s Internal Tools team replaced manual reporting scripts with Optimal AI, resulting in smaller pull requests (PRs), faster reviews, and data the team trusts.

- ~50% Reduction in PR Size: Smaller, reviewable changes → faster approvals and fewer merge conflicts.

- ~30% Faster PR Cycle Time: Clear visibility into root causes → quicker unblocking.

- 100% Centralized Engineering Visibility: Jira and GitHub data in one place → metrics teams trust.


“Optibot cut our PR review time nearly in half. Our team finally has visibility into what's slowing us down and our engineers love the AI summaries and suggestions. This is the first AI tool we've used that actually feels like part of the team.”

Lila Brooks

Software Engineering Manager, MongoDB

MongoDB is a leading developer data platform designed to help teams build, run, and scale modern applications faster. At its core is the MongoDB Atlas cloud database: a fully managed, flexible, document-oriented database that stores data in JSON-like structures instead of rigid tables.

Industry: Developer Platform / Database Software

Company size: 5K-10K employees

Pain point: Script- and spreadsheet-based reporting; poor UX leading to low adoption; limited visibility into engineering investments and PR health

Product used: Optimal AI Insights (engineering analytics)

Location: San Francisco

Quick metrics: PR size ↓ 50% · Cycle time ↓ 30% · Visibility 100%


The Problem

Unreliable Data and Manual Work

MongoDB’s Internal Tools team faced a visibility problem. They relied on home-grown scripts to track engineering metrics, but the system was fragile.

- High Maintenance: Engineers spent time fixing data scripts instead of building features.

- Low Trust: Aggregating the data by hand was difficult and error-prone, so the team didn't trust the numbers.

- No Root Cause Analysis: They knew reviews were slow but couldn't see why. They lacked the granularity to tell whether time was going to tech debt, bugs, or features.

“We were using scripts folks had written, dumping data into Excel, then producing higher-level dashboards… it was never easy to use.”

Lila Brooks

Software Engineering Manager, MongoDB

The friction showed up everywhere: metrics were slow to produce, hard to trust, and inconsistent across org levels. Adoption lagged because switching views, filtering by teams/people/activities, and answering simple questions took too much effort.

Without a single source of truth, it was difficult to:

- See where time was going (tech debt vs. bugs vs. discovery vs. feature work)

- Understand where PRs were getting stuck and why cycle time was high

- Spot patterns and unblock issues before they affected delivery

The Solution

Automated Insights and Root Cause Detection

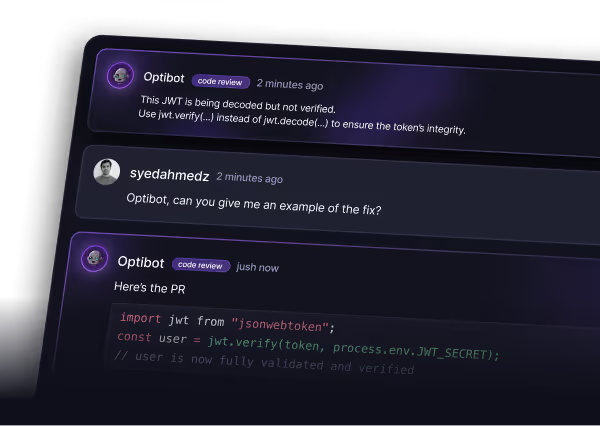

MongoDB deployed Optimal AI to create a single source of truth without the manual upkeep. By integrating directly with Jira and GitHub, the platform immediately surfaced the root cause of their slow reviews.

The Finding: The data showed a direct correlation between slow cycle times and large pull request (PR) sizes. Because PRs were so large, reviewers hesitated to pick them up, and code sat idle.

The Fix: Instead of just "monitoring" velocity, the team used the Investments view to categorize effort (Tech Debt vs. Features) and focused on reducing batch sizes to unblock the pipeline.

“We now have a single place to see all the metrics. We can filter by teams, people, activities without writing or maintaining code. The UX is smooth, so adoption improved from engineers up to directors.”

Lila Brooks

Software Engineering Manager, MongoDB

With shared visibility, leaders and ICs could pinpoint large PRs, see who opened them and why, and have the right conversations to create quick wins, like reducing PR size and improving deployment frequency.

The Results

The Impact at a Glance

- ~50% Reduction in PR Size: Smaller changes led to fewer merge conflicts and faster reviews.

- ~30% Faster PR Cycle Time: Accelerated feature delivery and release cadence.

- 100% Centralized Visibility: Replaced manual scripts with a single, automated view of engineering health.

1. Data drove behavior change

Once the team saw the objective data on PR sizes, they adapted immediately. They began breaking work into smaller chunks to smooth out the review process.

2. Speed through simplicity

Average PR size dropped by ~50%. Because the code changes were smaller and easier to review, the PR Cycle Time improved by ~30%.

By switching from manual scripts to Optimal AI, MongoDB turned visibility into action. They stopped guessing why reviews were slow and used data to fix the core behavior, resulting in a faster, more efficient engineering team.

“We were able to get the size of our PRs much smaller, nearly 50% and improve our PR cycle time by understanding why review was taking time.”

Lila Brooks

Software Engineering Manager, MongoDB

The Impact in Numbers

Before and after metrics for MongoDB’s team using Optimal AI

Real numbers verified by the leaders using the tech

| Metric | Before Insights | After Insights | Improvement |
| --- | --- | --- | --- |
| PR Size | Large, inconsistent, hard to review | Right-sized, easier to review | ~50% reduction |
| PR Cycle Time | Slow on large PRs; unclear drivers | Root-cause visibility; faster reviews | ~30% faster cycle time |
| Engineering Metrics Access | Custom scripts + CSV/Excel + manual dashboards | Single system; fast filters by team/person/activity | One source of truth |
| Investment Visibility | Fragmented view of effort | Investments by tech debt, bugs, discovery, features | Clear focus areas |
| Jira Integration | Manual rollups; limited insight into blocked work | Effort by epic/initiative; blocked-work detection | Fewer surprises |
| Adoption & UX | Low adoption; hard to switch views | Engineers through directors use shared dashboards | Org-wide adoption |
| Deployment Frequency | Held back by large PRs | Smaller PRs enable faster releases | More frequent deploys |
